
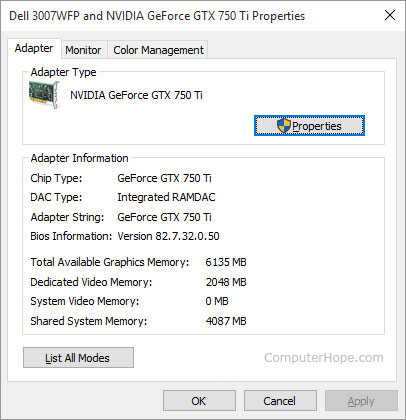

Hi, I need to log dedicated and dynamic memory usage for benchmarking purposes, but this version of GPU-Z doesn't seem to have the options with my graphics card. Am I just missing something? Is this not possible with the GTX 970? Or do I need to just monitor Memory Usage and see if this peaks?

Dedicated RAM refers to the memory actually in use by the video card. In old versions of GPU-Z, Dynamic RAM refers to the amount of memory reserved for use by the video card, whether needed or not.

The earlier sections in this post went through an alternative implementation of cudaMalloc using the CUDA virtual memory management functions. These functions are much more verbose and require more upfront knowledge of how the application uses the allocation. We show you the benefits of this extra verbosity later in this post. While we don’t go into too much detail about these functions here, you can look at the CUDA samples as well as the examples referenced in this post to see how they all work together.

GPU-Z memory usage dedicated vs dynamic code

To unmap a mapped VA range, call cuMemUnmap on the entire VA range, which reverts the VA range back to the state it was in just after cuMemAddressReserve. When you are done with the VA range, cuMemAddressFree returns it to CUDA to use for other things. Finally, cuMemRelease invalidates the handle and, if there are no mapped references left, releases the backing store of memory back to the operating system. The following code example shows what that looks like: cuMemUnmap(ptr, size)
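The teardown calls only make sense against a range that was reserved, backed, and mapped first, so the following is a minimal sketch of the whole lifecycle using the CUDA driver API. It assumes device 0 supports virtual memory management and that a single allocation of the minimum granularity is enough for illustration. The `check` helper and the one-granule size are assumptions made for this sketch, not part of the original post.

```cpp
#include <cuda.h>
#include <cstdio>
#include <cstdlib>

// Hypothetical helper for this sketch: abort with a message if a driver call fails.
static void check(CUresult r, const char* what) {
    if (r != CUDA_SUCCESS) {
        const char* msg = nullptr;
        cuGetErrorString(r, &msg);
        std::fprintf(stderr, "%s failed: %s\n", what, msg ? msg : "unknown error");
        std::exit(EXIT_FAILURE);
    }
}

int main() {
    check(cuInit(0), "cuInit");
    CUdevice dev;
    check(cuDeviceGet(&dev, 0), "cuDeviceGet");  // assumption: device 0
    CUcontext ctx;
    check(cuDevicePrimaryCtxRetain(&ctx, dev), "cuDevicePrimaryCtxRetain");
    check(cuCtxSetCurrent(ctx), "cuCtxSetCurrent");

    // Describe where the physical memory should live.
    CUmemAllocationProp prop = {};
    prop.type = CU_MEM_ALLOCATION_TYPE_PINNED;
    prop.location.type = CU_MEM_LOCATION_TYPE_DEVICE;
    prop.location.id = dev;

    // Sizes passed to the calls below must be multiples of the granularity.
    size_t granularity = 0;
    check(cuMemGetAllocationGranularity(&granularity, &prop,
                                        CU_MEM_ALLOC_GRANULARITY_MINIMUM),
          "cuMemGetAllocationGranularity");
    const size_t size = granularity;  // assumption: one granule is enough here

    // Create physical backing, reserve a VA range, and map one onto the other.
    CUmemGenericAllocationHandle handle;
    check(cuMemCreate(&handle, size, &prop, 0), "cuMemCreate");
    CUdeviceptr ptr = 0;
    check(cuMemAddressReserve(&ptr, size, 0, 0, 0), "cuMemAddressReserve");
    check(cuMemMap(ptr, size, 0, handle, 0), "cuMemMap");

    // Grant the current device read/write access to the mapped range.
    CUmemAccessDesc accessDesc = {};
    accessDesc.location.type = CU_MEM_LOCATION_TYPE_DEVICE;
    accessDesc.location.id = dev;
    accessDesc.flags = CU_MEM_ACCESS_FLAGS_PROT_READWRITE;
    check(cuMemSetAccess(ptr, size, &accessDesc, 1), "cuMemSetAccess");

    // Touch the memory to show the range is usable.
    check(cuMemsetD8(ptr, 0, size), "cuMemsetD8");
    check(cuCtxSynchronize(), "cuCtxSynchronize");

    // Teardown in the order the text describes: unmap the whole range,
    // return the VA range to CUDA, then release the allocation handle.
    check(cuMemUnmap(ptr, size), "cuMemUnmap");
    check(cuMemAddressFree(ptr, size), "cuMemAddressFree");
    check(cuMemRelease(handle), "cuMemRelease");

    check(cuDevicePrimaryCtxRelease(dev), "cuDevicePrimaryCtxRelease");
    std::puts("virtual memory lifecycle completed");
    return 0;
}
```

Building this requires the CUDA toolkit headers and linking against the driver library (for example with `-lcuda`), and running it requires a GPU with a driver that supports these functions.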

GPU-Z memory usage dedicated vs dynamic free

There are plenty of applications where it’s just hard to guess how big your initial allocation should be. You need a larger allocation but you can’t afford the performance and development cost of pointer-chasing through a specialized dynamic data structure from the GPU. What you really want is to grow the allocation as you need more memory, yet maintain the contiguous address range that you always had. If you have ever used libc’s realloc function, or C++’s std::vector, you have probably run into this yourself. Look at the following simple C++ class that describes a vector that can grow: class Vector [...]

[...] = CU_MEM_LOCATION_TYPE_DEVICE; accessDesc.flags = CU_MEM_ACCESS_FLAGS_PROT_READWRITE; cuMemSetAccess(ptr, size, &accessDesc, 1); Now, you can access any address in the range from the current device without a problem.

Of course, all the functions described so far have corresponding free functions.
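To make the growth problem concrete, here is a small host-only sketch of the grow-by-copy pattern that realloc-style containers fall back on. It is not the Vector class from the original post, which did not survive in this text. `NaiveVector`, `append`, and `bytes_copied` are hypothetical names used only for illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <cstring>

// Hypothetical illustration type: a byte buffer that grows by allocating a
// new block, copying the old contents, and freeing the old block.
class NaiveVector {
public:
    NaiveVector() = default;
    NaiveVector(const NaiveVector&) = delete;
    NaiveVector& operator=(const NaiveVector&) = delete;
    ~NaiveVector() { std::free(data_); }

    // Append n bytes from src, growing the buffer if needed.
    // Returns false if memory could not be allocated.
    bool append(const void* src, std::size_t n) {
        if (n == 0) return true;
        assert(src != nullptr);
        if (size_ + n > capacity_ &&
            !grow(std::max(capacity_ * 2, size_ + n))) {
            return false;
        }
        std::memcpy(data_ + size_, src, n);
        size_ += n;
        return true;
    }

    const unsigned char* data() const { return data_; }
    std::size_t size() const { return size_; }
    std::size_t capacity() const { return capacity_; }
    // Total bytes moved because of growth: the cost the text wants to avoid.
    std::size_t bytes_copied() const { return bytes_copied_; }

private:
    bool grow(std::size_t new_cap) {
        auto* fresh = static_cast<unsigned char*>(std::malloc(new_cap));
        if (fresh == nullptr) return false;
        if (size_ > 0) {
            std::memcpy(fresh, data_, size_);  // every growth recopies all data
            bytes_copied_ += size_;
        }
        std::free(data_);  // the old address becomes invalid here
        data_ = fresh;
        capacity_ = new_cap;
        return true;
    }

    unsigned char* data_ = nullptr;
    std::size_t size_ = 0;
    std::size_t capacity_ = 0;
    std::size_t bytes_copied_ = 0;
};
```

Each growth step moves all existing bytes and usually changes the base address. The virtual memory management functions aim to avoid both costs by keeping one reserved address range and mapping more physical memory into it as needed.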

GPU-Z memory usage dedicated vs dynamic how to

There is a growing need among CUDA applications to manage memory as quickly and as efficiently as possible. Before CUDA 10.2, the number of options available to developers was limited to the malloc-like abstractions that CUDA provides. CUDA 10.2 introduces a new set of API functions for virtual memory management that enable you to build more efficient dynamic data structures and have better control of GPU memory usage in applications. In this post, we explain how to use the new API functions and go over some real-world application use cases.

Yeah, but unfortunately I have the version without the 4K screen :/ I used GPU-Z with these results: GPU core clock: 1097.1 MHz.
